For the past several weeks a chunk of the news has been all about how the NSA, in conjunction with various other US government agencies, defense contractors, telcos, etc., has, for at least seven years and probably longer, been collecting mass quantities of data about the activities of private citizens, both of the USA and of other nations. The data collected was largely what we call traffic analysis data: who talked to whom, where, when, using what mechanism. It was mostly not the actual contents of the conversations, but so much can be deduced from who talked to whom, and when, that this should not reassure you in the slightest. If you haven’t seen the demonstration that, just by compiling and correlating membership lists, the British government could have known that Paul Revere would’ve been a good person to ask pointed questions about revolutionary plots in the colonies in 1772, go read that now.
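
To make "compiling and correlating membership lists" concrete, here is a minimal sketch of the general idea. It is not the method used in the demonstration mentioned above, and every name and group in it is made up for illustration. It only counts who shares groups with whom and then asks who is linked to the most other people, which is about the crudest form of traffic analysis there is.

```python
from collections import Counter
from itertools import combinations

# Hypothetical membership lists: group name -> set of members.
# None of these names or groups come from a real data set.
memberships = {
    "club_a": {"alice", "bob", "carol"},
    "club_b": {"bob", "dave"},
    "club_c": {"bob", "carol", "erin"},
    "club_d": {"dave", "frank"},
}


def shared_groups(memberships):
    """Count, for every pair of people, how many groups they share."""
    pair_counts = Counter()
    for members in memberships.values():
        # Sort so each pair is always recorded in the same order.
        for a, b in combinations(sorted(members), 2):
            pair_counts[(a, b)] += 1
    return pair_counts


def link_counts(memberships):
    """Count, for each person, how many distinct other people they are
    linked to through at least one shared group."""
    links = {}
    for a, b in shared_groups(memberships):
        links.setdefault(a, set()).add(b)
        links.setdefault(b, set()).add(a)
    return {person: len(others) for person, others in links.items()}


scores = link_counts(memberships)
most_connected = max(scores, key=scores.get)
print(most_connected, scores[most_connected])
```

Nothing here looks at what anyone said. The membership lists alone are enough to single out the person who ties the groups together.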

I don’t think it’s safe to assume we know anything about the details of this data collection, especially not the degree of cooperation the government obtained from telcos and other private organizations. There are too many layers of secrecy involved, there’s probably no one who has the complete picture of what the various three-letter agencies were supposed to be doing (let alone what they actually were doing), and there are too many people trying to bend the narrative in their own preferred direction. However, I also don’t think the details matter all that much at this stage. That the program existed, and was successful enough that the NSA was bragging about it in an internal PowerPoint deck, is enough for the immediate conversation to go forward. (The details may become very important later, though, especially details about who got to make use of the data collected.)

Lots of other people have been writing about why this program is a Bad Thing. Most critically, large traffic-analytic databases are easy to abuse for politically motivated witch hunts, which can occur and have occurred in the US in the past, and arguably are now occurring as a reaction to the leaks. One might also be concerned that this makes it harder to pursue other security goals; that it gives other countries an incentive to partition the Internet along national boundaries, harming its resilience; that it further harms the US’s image abroad, which was already not doing that well; or that the next surveillance program will be even worse if this one isn’t stopped. (Nothing new under the sun: Samuel Warren and Louis Brandeis’ argument in “The Right to Privacy” in 1890 is still as good an explanation as any you’ll find of why the government should not spy on the general public.)

I want to talk about something a little different; I want to talk about why the secrecy of these ubiquitous surveillance programs is at least as harmful to good governance as the programs themselves.

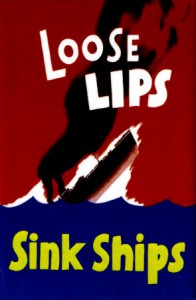

Secrecy culture

If you’ve had any exposure at all to the US military-industrial complex, you will have noticed two things: its institutional idiom of horrible PowerPoint slide decks, and its obsession with secrecy. No doubt this started with the entirely appropriate goal of operational secrecy: you don’t make it easy for the enemy to find and sink your troopships. But secrecy is contagious.

Once you decide that something is so dangerous that the entire world hinges on keeping it under control, this sense of fear and dread starts to creep outwards. The worry about what must be controlled becomes insatiable—and pretty soon the mundane is included with the existential.

From “Forbidden Spheres,” Nuclear Secrecy blog

And so all round objects become classified. This culture of secrecy is especially obvious where nuclear bombs are concerned, but it pervades every part of the complex, even to the groups and organizations that aren’t doing anything that’s formally secret. The default action is not to talk, and the default policy is to lock up the files. Publishing one’s research is an unusual action that must be justified and approved. Going on public record about an agency’s plans and activities is positively aberrant. I think this is why the US government collectively, quite a few officials personally, an awful lot of reporters in the establishment news media, and a truly depressing number of private citizens have been acting as if reporting that spying is going on is far, far worse than the spying itself. In the mind of someone invested in secrecy culture, leaking a secret is worse than anything. It isn’t done. And what with the military-industrial complex being the single largest employer in the country, and its attitudes trickling down to state and local government as well, it wouldn’t surprise me if nearly every citizen has had some contact with secrecy culture.

This hit me personally last weekend. I was at my grandmother’s 90th birthday party and one of my relatives wanted to know what I thought of the news. The conversation went around in circles for a while because we were both agreeing with each other that we were disgusted that such a thing could happen, but it turned out that I was disgusted by the spying and he was disgusted by the government’s failure to keep the spying secret. Once this became clear, I said to him that I didn’t think the spying should have been secret in the first place, and that made so little sense to him that it ended the conversation.

Kerckhoffs’ Principle

The thing is, too much secrecy turns out to be self-defeating. This is well-understood in cryptography; it is the famous principle proposed by Auguste Kerckhoffs in 1883:

The system [that is, the algorithm for encrypting messages] must not be required to be secret. It must be able to fall into the hands of the enemy without inconvenience.

This is counterintuitive. Why wouldn’t you conceal your encryption algorithm from the enemy? Indeed, even though Kerckhoffs wrote in 1883, up till about 1977 everyone did just that, believing that it couldn’t hurt. And some people, not to mention some major industrial standards organizations who ought to know better, still do. Unfortunately, it turns out that it does hurt. Cryptographic algorithms are difficult to design, and unforgiving of errors. A design that hasn’t been vetted by lots of people is almost surely flawed. If you publish an algorithm and invite feedback, you attract attention from many more cryptographers than you could hope to employ internally, and they’ll tell you when they find a problem. Meanwhile, whether or not you publish an algorithm, the odds are that the enemy will find out how it works. But when they find a flaw, they won’t tell you about it; they’ll just use it to read your messages. If you’re very lucky, before this happens, someone relatively well-disposed to you will discover your secret algorithm, find the flaws, and tell you about them in a highly public and embarrassing manner.
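
As a small illustration of what it looks like when only the key is secret, here is a sketch using HMAC-SHA256 from Python's standard library. Two caveats: this is message authentication rather than encryption (the standard library ships no cipher, so this is a stand-in for the same principle), and the message, key handling, and function names are assumptions made up for the example. The algorithm is completely public; an attacker who knows exactly how it works but does not hold the key still cannot produce a tag that verifies.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an authentication tag with a fully public algorithm."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check a tag in constant time to avoid leaking timing information."""
    return hmac.compare_digest(make_tag(key, message), tag)


# The only secret in the whole system is this randomly generated key.
key = secrets.token_bytes(32)
message = b"troopship sails at dawn"
tag = make_tag(key, message)

# The attacker knows the algorithm and the message, but has to guess the key.
attacker_key = secrets.token_bytes(32)
forged_tag = make_tag(attacker_key, message)

print(verify(key, message, tag))
print(verify(key, message, forged_tag))
```

The design choice worth noticing is that nothing about `make_tag` needs hiding. It can be published, reviewed, and attacked in the open, and if the key leaks you replace the key, not the algorithm.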

But Kerckhoffs’ Principle is not just for cryptographic primitives. It applies to network protocol design, which is very nearly as unforgiving, and not as codified: nowadays security analysts are far more likely to find a mistake in the way a protocol uses a cipher than to break the cipher itself. It also applies to software in general: there’s a black market for zero-day exploits (that is, not-yet-fixed security-critical bugs) in both open- and closed-source software, but open-source software attracts more attention from people who would rather see the bugs fixed, because open source (as a social phenomenon) is more inviting of experimentation and feedback.

We can generalize even further, past computer ’ware to other complex systems, such as nation-states and their governments. The logic of Kerckhoffs’ Principle predicts that when a political decision isn’t made in public with full knowledge of the situation, it will be more likely to be a bad decision, specifically a decision which can be exploited. Yet that is precisely the sort of decision making that secrecy culture demands. If the NSA’s blanket surveillance programs had been public knowledge from the get-go, we could have asked pointed questions, starting with “Is this worth the sacrifice of civil liberties?” but also including things like “Does this actually work against criminals who know their tradecraft?” and “You want how much money?” and of course “That wouldn’t happen to be your father’s brother’s nephew’s cousin’s former roommate’s defense contracting firm you’re proposing to hire, would it now?”

But they weren’t, and we didn’t, and oddly enough, it seems that we have spent billions of dollars on a system which doesn’t work against competent criminals but which invites unflattering comparisons with the Stasi. Worse yet, the NSA’s surveillance programs look suspiciously like a continuation of the Total Information Awareness program that Congress axed in 2003, which means the secrecy culture is prepared to ignore decisions by its elected overseers that don’t go its way, or twist arms behind the scenes till the decision is (secretly, of course) reversed. At that point, can we call this a democracy anymore?